I’m at EPFL right now on my way back from a trip to India. My last work-related stop there was the Tata Institute of Fundamental Research, where I am working with Vinod Prabhakaran on some follow-up work to our ISIT paper this year on correlated sampling. TIFR has a lovely campus in Navy Nagar, right next to the ocean in the south of Mumbai. It’s a short walk across the lawn to the ocean:

The beach at TIFR, looking north to the haze-obscured skyline of Mumbai

The grounds themselves are pretty rigorously landscaped and manicured:

The main building seen across the lawn in front of the ocean.

Earlier in my trip I visited IIT-Madras, hosted by Andrew Thangaraj and Radha Krishna Ganti, and IIT-Bombay, where I was working with Bikash Dey on extensions to some of our AVCs-with-delay work. It’s been a great trip and it’s nice to visit so many different schools. The challenges facing Indian higher education are very different from those in the US, and it’s a good change of perspective from my usual US-centric view of things. Maybe I’ll write more on that later.

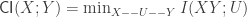

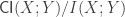

But for now, I wanted to get back to some actual technical stuff, so I thought I’d mention something that came up in the course of my discussions with Vinod today. For a joint distribution $P_{XY}$, Wyner defined the common information in the following way:

$$C(X;Y) = \min_{X - U - Y} I(X,Y;U),$$

where the minimum is over auxiliary random variables $U$ such that $X - U - Y$ form a Markov chain.

One fun fact about the common information is that it’s at least as large as the mutual information, so $I(X;Y) \le C(X;Y)$. One natural question that popped up as part of a calculation we had to do was whether for doubly-symmetric binary sources we could have a bound like

$$C(X;Y) \le c \cdot I(X;Y)$$

for some “nice” $c$. In particular, it would have been nice for us if the inequality held with a particular value of $c$, but that turns out to not be the case.

Suppose $(X,Y)$ is a doubly-symmetric binary source (DSBS), where $Y$ is formed from a uniform binary $X$ by passing it through a binary symmetric channel (BSC) with crossover probability $p$. Clearly the mutual information is $I(X;Y) = 1 - h(p)$, where $h(\cdot)$ is the binary entropy function. For the common information, we turn to Wyner’s paper, which says $C(X;Y) = 1 + h(p) - 2h\left(\tfrac{1}{2}\left(1 - \sqrt{1 - 2p}\right)\right)$, which is a bit of a weird expression. Plotting the two for $p$ between 0 and 1/2 we get:

Mutual and Common Information for a DSBS

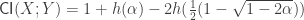
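For anyone who wants to reproduce the numbers behind the plots, here is a minimal sketch. It assumes the crossover probability is called `p`, that entropies are measured in bits, and that Wyner's DSBS expression is $1 + h(p) - 2h\left(\tfrac{1}{2}(1 - \sqrt{1 - 2p})\right)$. The function names are made up for illustration and are not from any library.

```python
import math


def binary_entropy(q: float) -> float:
    """Binary entropy h(q) in bits, with the convention h(0) = h(1) = 0."""
    if q <= 0.0 or q >= 1.0:
        return 0.0
    return -q * math.log2(q) - (1.0 - q) * math.log2(1.0 - q)


def dsbs_mutual_information(p: float) -> float:
    """I(X;Y) = 1 - h(p) for a DSBS with crossover probability p."""
    return 1.0 - binary_entropy(p)


def dsbs_common_information(p: float) -> float:
    """Wyner's common information for a DSBS, valid for 0 <= p <= 1/2.

    Assumed form: 1 + h(p) - 2 h(a), where a = (1 - sqrt(1 - 2p)) / 2.
    """
    a = 0.5 * (1.0 - math.sqrt(1.0 - 2.0 * p))
    return 1.0 + binary_entropy(p) - 2.0 * binary_entropy(a)


# Print both quantities and their ratio at a few crossover probabilities.
for p in (0.05, 0.1, 0.25, 0.4, 0.45, 0.49):
    mi = dsbs_mutual_information(p)
    ci = dsbs_common_information(p)
    print(f"p={p:.2f}  I={mi:.4f}  C={ci:.4f}  C/I={ci / mi:.2f}")
```

Running this prints one row per value of `p`. Swapping the loop for a dense grid on (0, 1/2) and handing the arrays to a plotting library should give curves like the ones shown here.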

If we plot the ratio $C(X;Y)/I(X;Y)$ versus the crossover probability $p$, we get the following:

Ratio of Common to Mutual Information for a DSBS

The ratio blows up as the crossover probability approaches 1/2! So not only is the common information larger than the mutual information, it can be larger by an arbitrarily large factor.
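A rough way to see where the blow-up comes from is to expand both quantities near crossover probability 1/2. The sketch below writes the crossover probability as $p = 1/2 - \epsilon$ (notation chosen here for illustration), takes Wyner's DSBS expression to be $C(X;Y) = 1 + h(p) - 2h(a)$ with $a = \tfrac{1}{2}(1 - \sqrt{1 - 2p})$, and uses the standard expansion $h(1/2 - \delta) = 1 - \tfrac{2}{\ln 2}\delta^2 + O(\delta^4)$:

$$
\begin{aligned}
I(X;Y) &= 1 - h\left(\tfrac{1}{2} - \epsilon\right) \approx \frac{2}{\ln 2}\,\epsilon^2, \\
a &= \tfrac{1}{2} - \sqrt{\epsilon/2} \quad\Longrightarrow\quad h(a) \approx 1 - \frac{\epsilon}{\ln 2}, \\
C(X;Y) &= 1 + h(p) - 2h(a) \approx \frac{2}{\ln 2}\,\epsilon, \\
\frac{C(X;Y)}{I(X;Y)} &\approx \frac{1}{\epsilon}.
\end{aligned}
$$

So under these approximations both quantities vanish as $\epsilon \to 0$, but the mutual information does so quadratically while the common information does so only linearly, which would make the ratio grow like $1/\epsilon$.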

It might seem like this is bad news for us, but it just made the problem we’re working on more interesting. If we get any results on that I might be less enigmatic and blog about it.