A new postdoc here, Punyaslok Purkayastha, pointed out to me a Probability Surveys paper by Robin Pemantle on random processes with reinforcement, and I’ve been reading through it in spare moments (usually on the bus). I have had a problem knocking about my head that is related (Ram Rajagopal and I thought we could do in our spare time but we never managed to find enough time to work on it). All this stuff is pretty well-known already, but I thought it was a nice story.

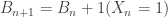

A Polya urn process starts by taking an urn with  red balls and

red balls and  black balls at time 0. For each time

black balls at time 0. For each time  , draw a ball uniformly from the balls in the urn and then replace it together with another ball of the same color. Let

, draw a ball uniformly from the balls in the urn and then replace it together with another ball of the same color. Let  be the sequence of colors that you draw, where red is 0 and black is 1. So with probability

be the sequence of colors that you draw, where red is 0 and black is 1. So with probability  we have

we have  . The urn is updated according to

. The urn is updated according to  and

and  .`

.`

To figure out the asymptotic behavior of this process, just note that the sequence of colors is exchangeable. If  has

has  1’s in it, then

1’s in it, then

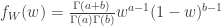

From this it is clear that the probability of seeing $x_1^n$ only depends on its type (empirical distribution, for non-information theorists). Since the sequence is exchangeable, de Finetti’s Theorem shows that the fraction of red balls  converges almost surely to a random variable

converges almost surely to a random variable  , and the limit of the formula above shows that the limit is a beta distribution with parameters

, and the limit of the formula above shows that the limit is a beta distribution with parameters  :

:

A more general process is the Friedman urn process, in which you add  balls of the same color you drew and

balls of the same color you drew and  balls of the opposite color. If

balls of the opposite color. If  then Freedman (different spelling, different guy) showed that the proportion of red balls tends almost surely to 1/2. This happens because the evolution ends up looking like a symmetric two-state Markov chain, which has (1/2, 1/2) as its stationary distribution. Note here that the limiting fraction is almost surely constant, as opposed to there being a limiting distribution, as in the Polya process.

then Freedman (different spelling, different guy) showed that the proportion of red balls tends almost surely to 1/2. This happens because the evolution ends up looking like a symmetric two-state Markov chain, which has (1/2, 1/2) as its stationary distribution. Note here that the limiting fraction is almost surely constant, as opposed to there being a limiting distribution, as in the Polya process.

Let’s look at a special case where instead of adding a ball of the same color that you drew, you only add a ball of the opposite color (which is a Friedman process with parameters (0,1)). Freedman’s result doesn’t apply here, but Kingman has a nice paper (which I am reading now) relating this process to a third process: the OK Corral process.

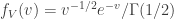

In the OK Corral process, say we start with  good and bad cowboys. We choose one cowboy uniformly at random and then he kills a cowboy on the opposite side. This process continues until one side has all been shot. This process is precisely the time reversal of the special Friedman urn process above. If we let

good and bad cowboys. We choose one cowboy uniformly at random and then he kills a cowboy on the opposite side. This process continues until one side has all been shot. This process is precisely the time reversal of the special Friedman urn process above. If we let  denote the number of surviving cowboys after the process terminates when we start with

denote the number of surviving cowboys after the process terminates when we start with  , Kingman proves that

, Kingman proves that

converges to a gamma distribution with parameter 1/2:

The weird thing about this is that the scaling is  to get an asymptotic distribution.

to get an asymptotic distribution.

Kingman and Volkov extend the analysis by embedding the urns into birth processes, which is another technique for balls-into-bins with feedback of urns with reinforcement. But that’s another story for another time (but maybe not another blog post, this one took a bit longer than I expected).